MusicAtlas is a music intelligence platform that transforms audio, lyrics, and contextual signals into a searchable, referenceable system. Rather than relying on manual tags or closed recommendation feeds, MusicAtlas enables open discovery across platforms, catalogs, and workflows.

Conceptually, MusicAtlas applies a search-engine approach to music, similar to how web search engines model and rank relationships across large collections of pages. Instead of links, MusicAtlas focuses on relationships based on sound, meaning, and context.

MusicAtlas relationships map.

At its core, MusicAtlas is designed to answer a simple question: how does music relate to other music, and how can that understanding be searched, explored, and reused anywhere?

Analyze sources. MusicAtlas analyzes audio, lyrics, metadata, and contextual signals to derive structured representations that describe how music relates across sound, meaning, and usage — without functioning as a streaming or storage system.

Apply multiple models. Rather than assuming a single “best” model, MusicAtlas uses multiple complementary machine-learning models to capture sonic similarity, lyrical themes, and contextual relationships.

Index representations. These representations are organized into a multi-representation search index designed for fast, flexible querying — enabling similarity search, lyric-driven discovery, and exploration across large music collections.

Learn relationships. Relationships across the index are continuously refined to improve relevance, context-aware similarity, and discovery.

Deliver intelligence. Results are delivered through search interfaces, APIs, and products built on the platform, with outputs designed to integrate into workflows and resolve across listening destinations.

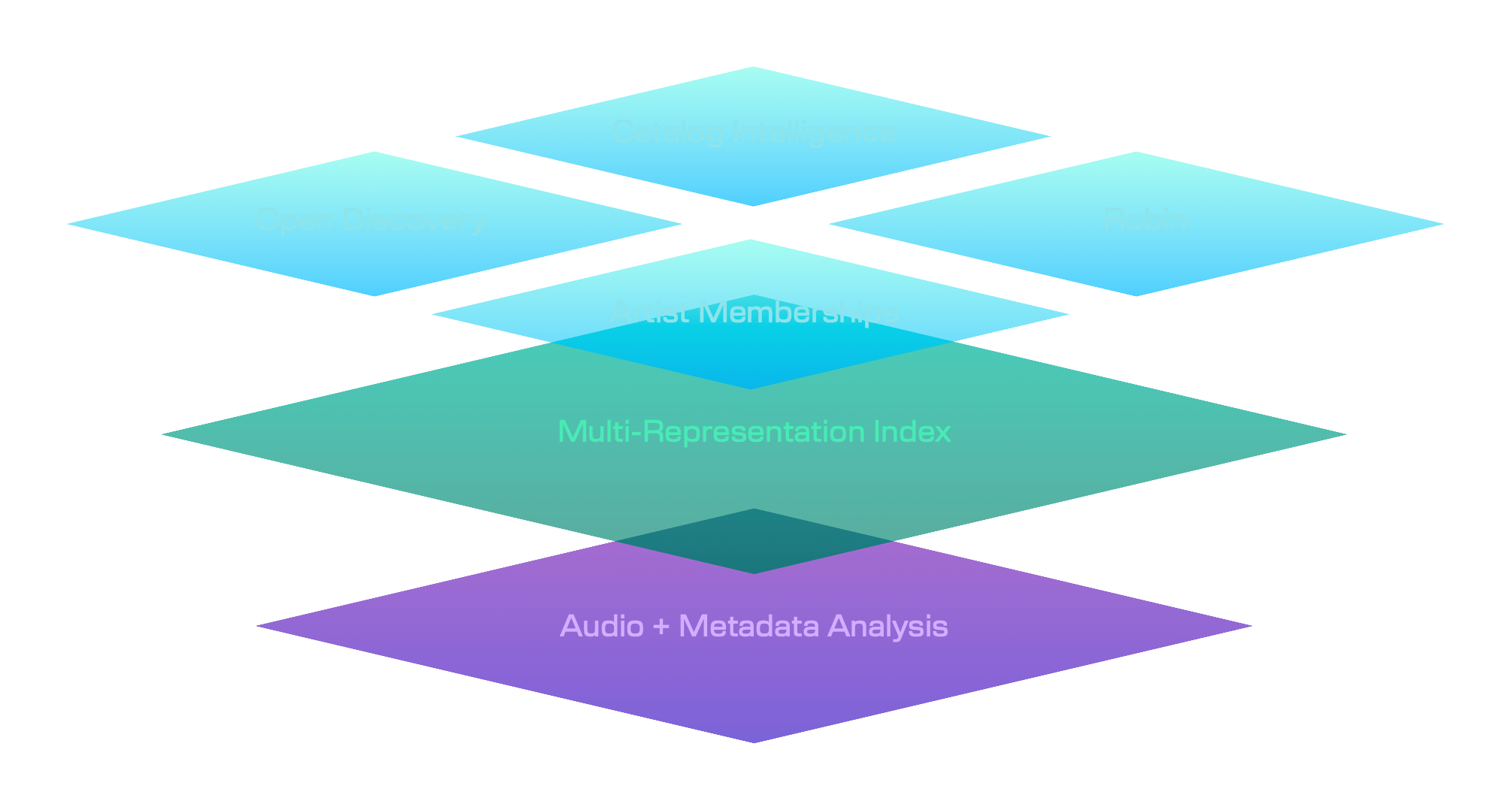

A simplified view of the MusicAtlas platform architecture.
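The pipeline described above can be illustrated with a minimal sketch. It is not MusicAtlas's implementation or API: the representation names (`sonic`, `lyrical`, `contextual`), the `MultiRepresentationIndex` class, the toy two-dimensional vectors, and the weighted-cosine scoring are all assumptions chosen only to show how several model outputs per track could sit in one index and be queried with different emphasis.

```python
import math
from dataclasses import dataclass, field

# Hypothetical representation names; the real model set is not documented here.
REPRESENTATIONS = ("sonic", "lyrical", "contextual")


def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


@dataclass
class MultiRepresentationIndex:
    """Toy in-memory index: one vector per representation per track."""
    tracks: dict = field(default_factory=dict)

    def add(self, track_id, vectors):
        # Every track must carry all representations so scores are comparable.
        missing = [name for name in REPRESENTATIONS if name not in vectors]
        if missing:
            raise ValueError(f"missing representations: {missing}")
        self.tracks[track_id] = vectors

    def search(self, query_id, weights, top_k=3):
        """Rank other tracks by a weighted average of per-representation cosine similarity."""
        total = sum(weights.values())
        if total <= 0:
            raise ValueError("weights must sum to a positive value")
        query = self.tracks[query_id]
        scored = []
        for track_id, vectors in self.tracks.items():
            if track_id == query_id:
                continue
            score = sum(
                w * cosine(query[name], vectors[name]) for name, w in weights.items()
            ) / total
            scored.append((track_id, score))
        scored.sort(key=lambda pair: pair[1], reverse=True)
        return scored[:top_k]


# Toy data: in a real system these vectors would come from separate models.
index = MultiRepresentationIndex()
index.add("track_a", {"sonic": [1.0, 0.0], "lyrical": [0.0, 1.0], "contextual": [1.0, 1.0]})
index.add("track_b", {"sonic": [0.9, 0.1], "lyrical": [1.0, 0.0], "contextual": [1.0, 0.9]})
index.add("track_c", {"sonic": [0.0, 1.0], "lyrical": [0.1, 0.9], "contextual": [0.2, 1.0]})

by_sound = index.search("track_a", {"sonic": 1.0})
by_lyrics = index.search("track_a", {"lyrical": 1.0})
```

With this toy data, a sound-only query for `track_a` ranks `track_b` first, while a lyrics-only query ranks `track_c` first. The same index answers both questions because each representation is stored separately and combined only at query time.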

Most music discovery systems are closed by design. They optimize for engagement inside a single platform and limit discovery to what benefits that ecosystem.

MusicAtlas is built for open discovery — prioritizing understanding, searchability, and reuse across platforms rather than retention inside one app.

MusicAtlas is built for fans exploring music beyond algorithmic feeds, industry teams searching and understanding large music libraries, music supervisors and editors discovering non-obvious matches, and developers building products on top of music intelligence.

MusicAtlas is not a streaming service, a closed recommendation feed, a tag-only classification system, or a replacement for systems of record.

MusicAtlas works by indexing music the way search engines index the web — through layered analysis, continuous learning, and open access to results. In practice, it becomes the connective tissue between music, understanding, and discovery — wherever music is used.