MusicAtlas is music search infrastructure built on multi-model analysis of audio, lyrics, and context. It turns recorded music into a system that can be searched, explored, and reused across workflows and platforms.

The use cases below show how different teams apply music search in practice — from discovery and research to editorial exploration, catalog intelligence, and creative matching.

Reduce search time and improve accuracy for “sounds like” and lyrical-intent matching across cleared catalogs.

Search your catalog by sound, lyrics, and context to support strategy, pitching, and discovery across the roster.

Drive operating leverage across catalog portfolios with faster workflows, automation, and measurable discovery intelligence.

Understand positioning and creative adjacency to guide releases, collaborations, comps, and narrative framing.

Discover non-obvious artists and tracks using reference-track search, lyric themes, and intent-driven exploration.

Explore similarity and discovery context for your own music — and generate editorial-ready positioning and pitch materials.

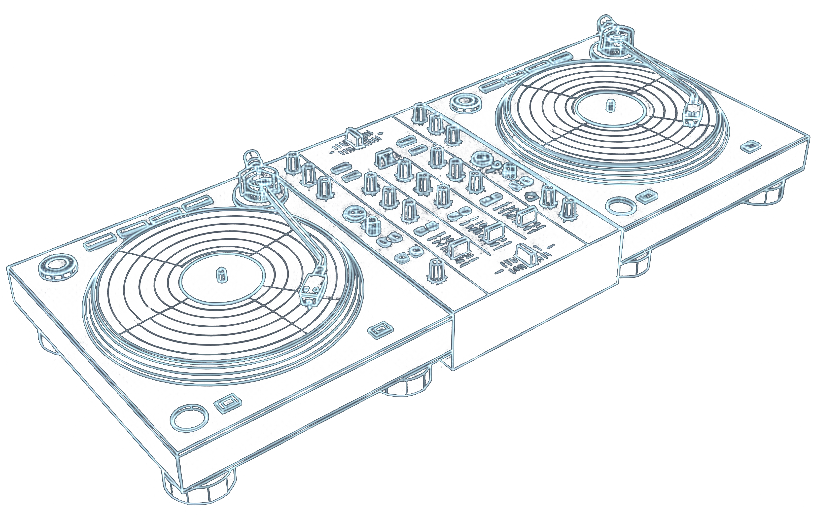

Build sets faster by finding adjacent tracks by groove, energy, era, and texture — then export to your listening destination.

MusicAtlas applies multi-model analysis across audio, lyrics, and context — so you can search recorded music by how it sounds, what it means, and how it relates.

→ "Find tracks that feel like early LCD Soundsystem."

→ "Show me songs about starting over, without being too literal."

→ "Search only my catalog for cleared, cinematic alt-pop for a trailer."

We work with listeners, artists, and industry teams to make music more searchable, more discoverable, and more reusable. If you want to explore a workflow or integrate MusicAtlas into your systems, we’d love to talk.
